
See also my Large Language Models section.

...

There you can download the abliterated models I'm using locally(!).

I have another page on a nice topic, too: (emergent) swarm intelligence. See my Cellular Automata page (TODO).

These are only my own favorites; there are others, too. But I don't really like using the public versions (only sometimes).

- Gemini (Google)
- ChatGPT (OpenAI)

These three are the apps I've already tested:

- LM Studio
- llama.cpp
- ollama

These ones I haven't tested; I've only heard about them. Maybe they're good?

- Jan
- vllm
- msty
- mlx-lm

I really like running LLMs on my local machine, or on something else not accessible to others. Even though I'm pretty sure "E.T./A.I. phone home"... xD~

- Open LLM Leaderboard

Here are my favorite models (currently):

...

Sorted by date/... randomly!

...

My tip: see also the abliterated models section below (with download links)! ...

Here are some abliterated models on Hugging Face (please search for them there explicitly).

JFYI: Now you can get a copy of my abliterated models here!

- failspy
- huihui-ai
- mlabonne
- mradermacher
- nicoboss

It's about uncensoring LLMs the "easy" way: in a "global" form, by changing the model itself, not (as usual) by changing the prompt(s). Find out more here:

- Uncensor any LLM with abliteration #1
- Uncensor any LLM with abliteration #2
- Refusal in LLMs is mediated by a single direction #1
- Refusal in LLMs is mediated by a single direction #2
- Demo of bypassing refusal
- LLM Abliterate v1.4 script, adapted for Mistral-Small-Instruct-2409
- `remove-refusals-with-transformers`

I collected the interesting links (for myself) while surfing the web.

They aren't sorted by priority or anything like that; they're just sorted by date!

- robots.txt)
- Unsloth Docs
- How Transformers Think: The Information Flow That Makes Language Models Work
- How LLMs Choose Their Words: A Practical Walk-Through of Logits, Softmax and Sampling
- A visual introduction to machine learning
- Model Tuning and the Bias-Variance Tradeoff
- Language models can explain neurons in language models
- Transformer Explainer
- Prompt caching: 10x cheaper LLM tokens, but how?
- [YouTube] Andrej Karpathy
- DreamGen
- What Is ChatGPT Doing … and Why Does It Work?
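To make the "global" uncensoring idea behind abliteration a bit more concrete, here is a minimal sketch of the core trick described in the "Refusal in LLMs is mediated by a single direction" write-ups. First, estimate a "refusal direction" as the normalized difference between mean activations on harmful and on harmless prompts. Then orthogonalize a weight matrix against it so that the matrix can no longer write along that direction.

This is only an illustration under stated assumptions. The activations and weights are synthetic random numbers, not taken from a real model. The helper names (`refusal_direction`, `orthogonalize`, etc.) are hypothetical and do not come from any of the linked scripts.

```python
import math
import random

Vector = list[float]
Matrix = list[list[float]]  # row-major, shape (d_model, d_in)


def mean(vectors: list[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: Matrix, x: Vector) -> Vector:
    return [dot(row, x) for row in m]


def refusal_direction(harmful: list[Vector], harmless: list[Vector]) -> Vector:
    """Unit vector along mean(harmful) - mean(harmless) activations."""
    diff = [a - b for a, b in zip(mean(harmful), mean(harmless))]
    norm = math.sqrt(dot(diff, diff))
    return [v / norm for v in diff]


def orthogonalize(weight: Matrix, r: Vector) -> Matrix:
    """Return W' = W - r (r^T W), so W' can no longer write along r."""
    rows, cols = len(weight), len(weight[0])
    # r^T W: how strongly each input dimension writes along r
    proj = [sum(r[i] * weight[i][j] for i in range(rows)) for j in range(cols)]
    return [[weight[i][j] - r[i] * proj[j] for j in range(cols)] for i in range(rows)]


# --- Demo on synthetic data (random numbers, NOT real model activations) ---
rng = random.Random(0)
d_model, d_in = 16, 8
harmless = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(100)]
# Hypothetical "harmful" activations: same noise, shifted by +3 along dimension 0
harmful = [[rng.gauss(0, 1) + (3.0 if k == 0 else 0.0) for k in range(d_model)]
           for _ in range(100)]

r = refusal_direction(harmful, harmless)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_model)]
W_abl = orthogonalize(W, r)

x = [rng.gauss(0, 1) for _ in range(d_in)]
print("component along r before:", dot(r, matvec(W, x)))
print("component along r after: ", dot(r, matvec(W_abl, x)))  # ~0 up to rounding
```

Real tools work on actual model checkpoints and pick the layer and direction experimentally. The refusal-direction write-ups apply the same projection to every matrix that writes into the residual stream, which is what makes the change "global" instead of prompt-based.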

I don't know how well this scales in practice, but the idea itself seems interesting!

See (with its own GitHub repository).

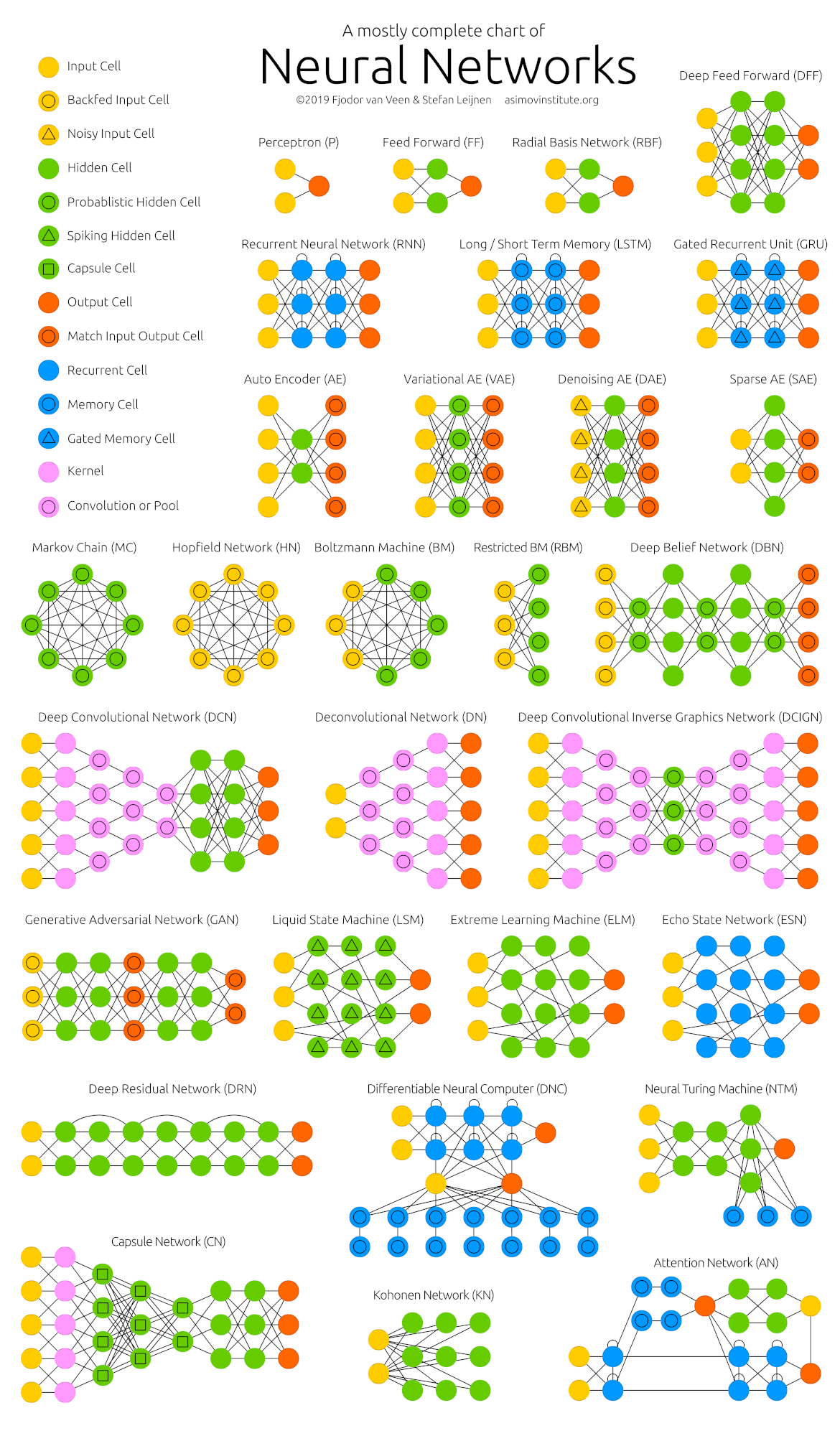

The official link is above, in the list of Links. I don't know all these types of neural networks, but it looks like an interesting overview.

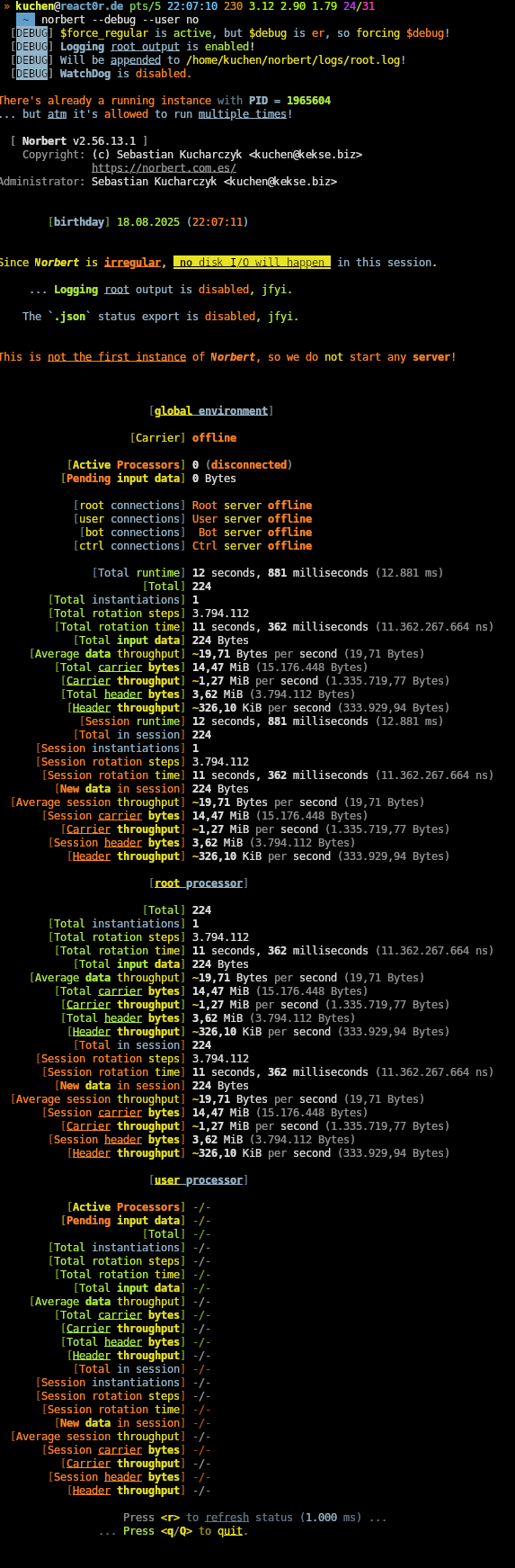

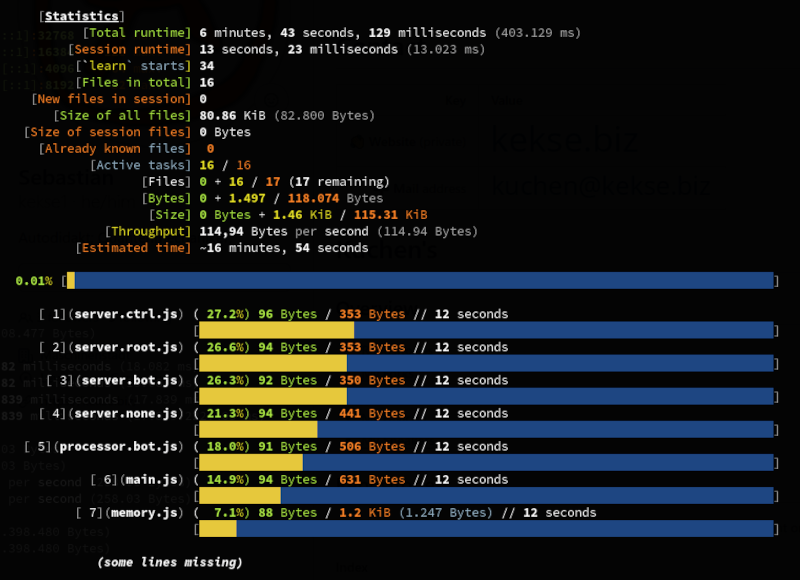

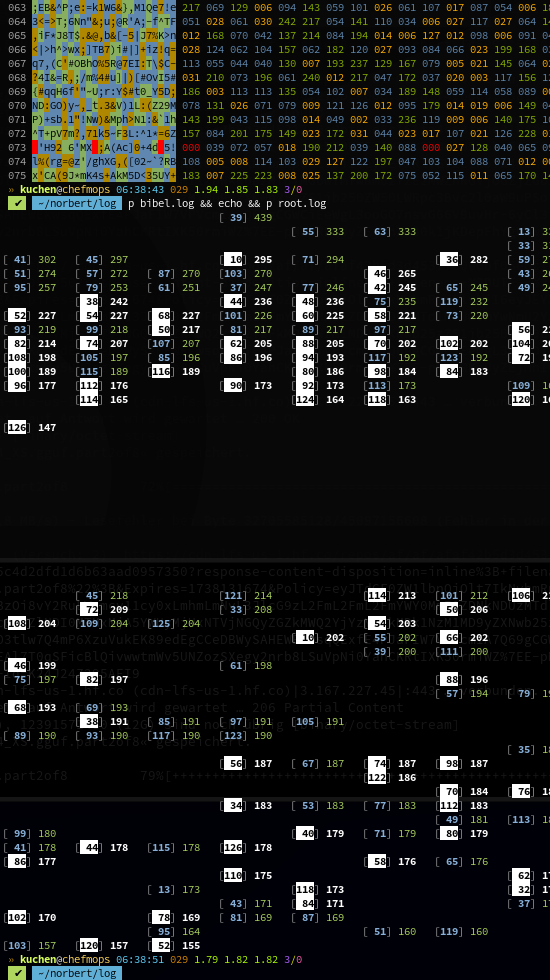

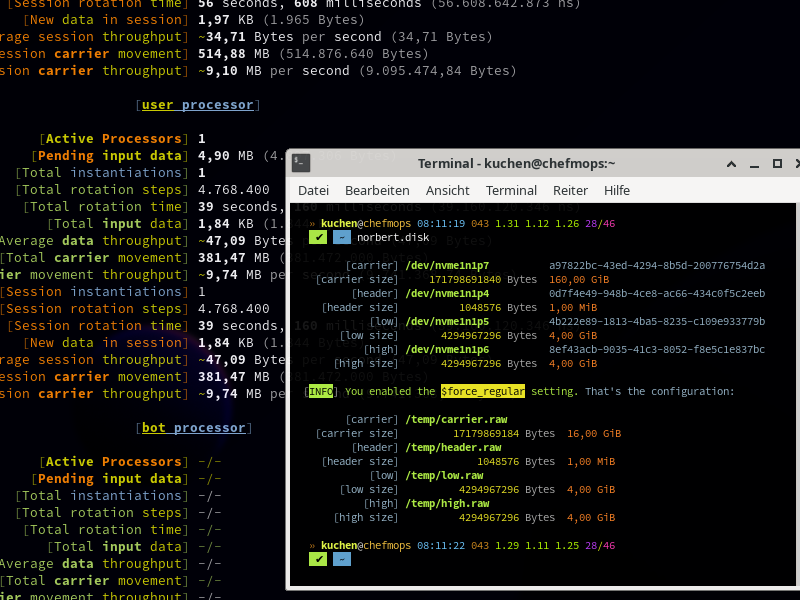

This is my very own artificial intelligence.

It's based entirely on raw bytes (pure "byte code"), not on tokens or anything like that, which is what current LLMs are based on.

The input is processed abstractly. The last output is then injected once more as a second, parallel input byte (a kind of feedback loop).
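The actual implementation isn't shown on this page, so the following is only a minimal sketch of the data flow as described. Raw bytes go in without a tokenizer, and at every step the previous output byte is fed back as a second, parallel input. `run_with_feedback` and `toy_step` are hypothetical names. The XOR "model" is just a placeholder for whatever abstract processing the real system does.

```python
from typing import Callable

# (current input byte, previous output byte) -> next output byte
StepFn = Callable[[int, int], int]


def run_with_feedback(data: bytes, step: StepFn, initial_feedback: int = 0) -> bytes:
    """Process raw bytes one at a time; each step also sees the last output byte."""
    out = bytearray()
    feedback = initial_feedback
    for byte_in in data:  # iterating over bytes yields ints 0..255
        feedback = step(byte_in, feedback) & 0xFF  # clamp to a single byte
        out.append(feedback)  # the output becomes the next step's 2nd input
    return bytes(out)


def toy_step(byte_in: int, prev_out: int) -> int:
    """Hypothetical stand-in for the real (abstract) processing: a simple XOR."""
    return byte_in ^ prev_out


text = "héllo"
raw = text.encode("utf-8")  # no tokenizer: 'é' is simply two bytes
print(list(raw))  # [104, 195, 169, 108, 108, 111]
print(list(run_with_feedback(raw, toy_step)))
```

Because the feedback byte is just another input, the step function can be swapped for any byte-in/byte-out model without changing the loop.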

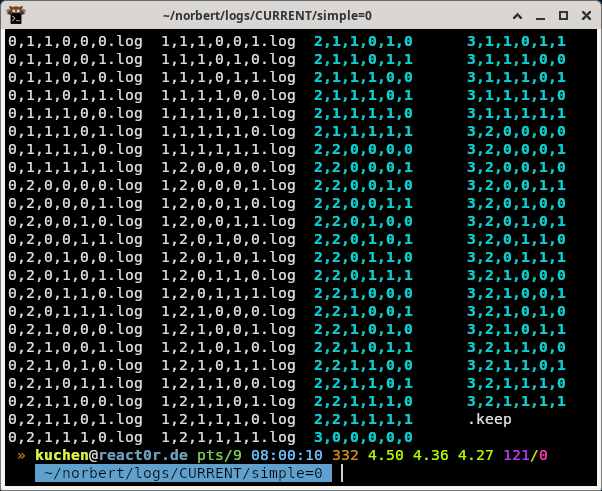

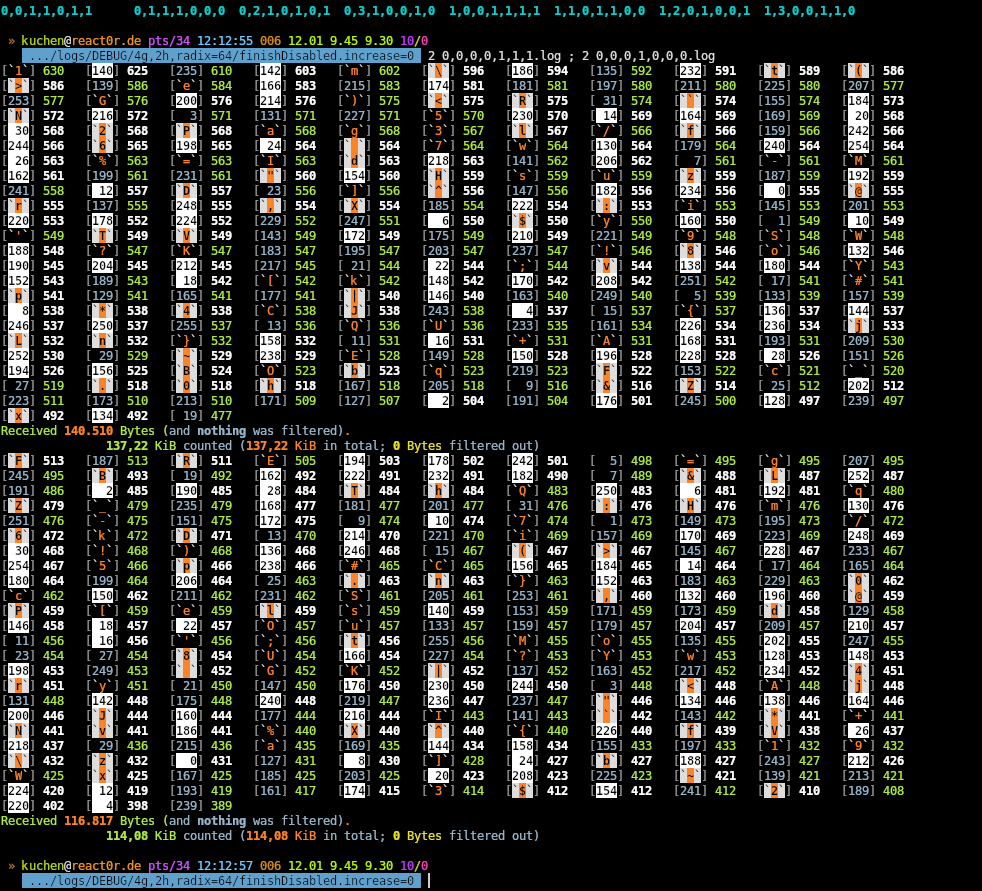

And here are some screenshots of the process itself, plus two helper utilities I created especially for this purpose; also my dump.js.